1Termination (asynchronous thread control)¶

Termination is one of those interesting concepts that occasionally seem trivial (if threads terminate on their own), but issues may arise if a thread tries to terminate another.

We saw before that Thread.stop() and Thread.before() were fundamentally unsafe to use (async, could run into deadlock). There are also other functions we may want to use such as the idea of suspend() and resume() another thread, but those are unsafe as well; they provide a lot of ways to end up with deadlocks (or in an inconsistent state).

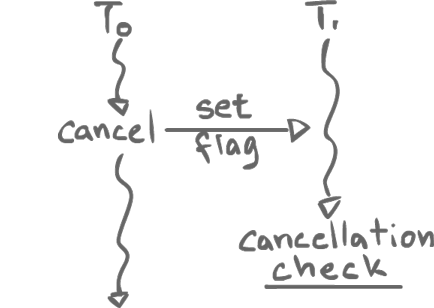

If we know our code is safe, a polling approach is more appropriate. That is, instead of having Thread 1 suspending Thread 2, Thread 1 will ask Thread 2 to suspend, and only once Thread 2 is in a suspend-able state, it suspends itself. A thread sets a flag for another to check.

1.1PThreads Cancellation¶

There are a few mechanisms for termination by polling. One that is quite complete is the one seen in PThreads which has the idea of cancellation.

PThreads adds further complexity to this. Coming from C, there is the idea of a cleanup handler: a stack of functions which can be pushed on a thread that execute once it terminates. At the cancellation point, if thread termination occurs, all the cleanup handlers are called.

pthread_cancel()- Posts a cancellation request.

The cancellation points are supposed to be at known safe places. When waiting at a condition variable, or when joining a thread are such points. Some OS calls such as sleep(), open(), read(), semaphore_wait(), or some IO control, the ones that return EINTR, are also points where a cancellation check will occur.

test_cancel()- Explicitly checks for cancellation.

Nb: mutex_lock is not a cancellation point, despite the fact that it makes the thread wait.

In PThreads, we can also enable and disable cancellation (it is enabled by default). If cancellation is disabled, cancellation requests are not ignored, they actually remain pending. The cancellation flag will actually be honored once cancellation is enabled again.

1.1.1Cancellation behavior¶

PTHREAD_CANCEL_DEFERRED is the default behavior that is described above. However, it may be changed to PTHREAD_CANCEL_ASYNCHRONOUS where we end up with something along the lines of Thread.stop() where threads are suspended instantly. Still, cleanup handlers will get executed.

1.2Building cancellation in Java¶

The core idea of cancellation is to have a boolean flag that announces cancellation, and have the thread check for it at the appropriate moment. Java does not have a mechanism for cancellation built in, but it is trivial to implement it ourselves. However, the real complexity of cancellation is [something about wake states?].

While Java does not have cancellation, it does provide Thread.interrupt().

Thread.interrupt()- Posts an interrupt. A flag variable is set to signal interruption.

Then, whenever the thread tries to enter such a function as wait(), sleep() or join(), it sees the flag and throws an InterruptedException. Just like in PThreads, it is not checked when acquiring a lock.

Thread.interrupted()- Asks if interrupted (and cleans the flag if so).

What we do if a thread is interrupted (or when an InterruptedException is caught) is up to us. We could leave the program, or ignore it altogether.

2Barriers¶

We may have a point in a concurrent program where all the threads, or a subset of threads, should meet before continuing.

computeNextFrame(); // Compute the next animation frame using multiple threads // There should be a barrier draw(); // The drawing cannot continue until all the threads are done with their computation

There is more than one way to solve this. For example, we could create new threads and join them all, and we get a barrier. However, the overhead of creating/destroying and joining threads is likely to be unacceptable.

2.1Simple barrier for n threads (one-shot)¶

volatile int numTreads = n; // All threads -> Work <- fetch_and_add(numThreads, -1); Decrement the number of threads while (numThreads != 0) Thread.yield(); // Spin until all the threads are done

This is an n-thread barrier, and it works, but it cannot be reused. We could try and fix this in a simple way:

volatile int numTreads = n; // All threads while (true) { -> work <- if (FA(numThreads, -1) == 1) numThreads = n; // The last thread shall reset the barrier else while (numThreads != 0) Thread.yield(); }

This does not work however, because the thread which resets the counter may do so before all the threads have gotten out of their spin lock.

In order to have a proper reusable barrier, there should be two components. Firstly, we have to see that all the threads show up, but secondly, before it is reset, all the threads should have left. One way to do it is to have two of the simple barrier seen above, but there are nicer ways.

2.2Reusable Barrier (sense-reversing barrier)¶

There is a boolean variable that tracks of the phase (odd, or even) to essentially have two barriers in one.

volatile boolean phase = true; // odd or even for the barrier volatile boolean phases[n]; // odd or even for the threads -> defaults to false volatile int numThreads = n; // All threads while (true) { -> Work <- if (FA(numThreads, -1) == 1) { // The last thread switches the phase numThreads = n; phase = phases[id]; } else { while (phase != phases[id]) Thread.yield(); // Spin on the phase } phases[id] = !phases[id]; // Now waiting for the next phase }

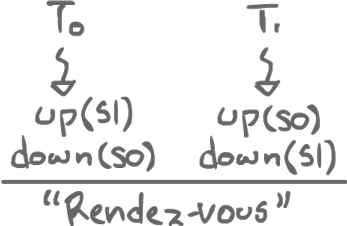

Nb: There is special case of a reusable barrier which involves only two processes/threads (seen a few lectures ago) implemented with semaphores.

When there is only two processes/threads, it is known as a rendez-vous point.

3Priorities¶

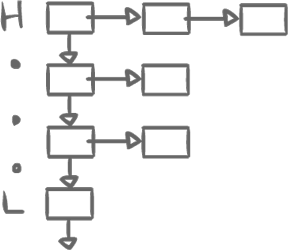

We know about the priority preemptive model. We have a list of lists of threads, going from high to low priority, scheduling things in round-robin favor. However, it is really to find ourselves in a bad situation using this scheme when we force a high priority on some thread or process.

Also, different contexts have different priorities, and how we map different priority scheme to each other is completely arbitrary.

PThreads has FIFO and RR, both of which can guarantee to have at least 32 priorities (even 127 or more in practice) and OTHER (which has whatever number of priorities it may have). The priority model inside of these priority schemes are local; they may overlap, but comparing between models is not defined.

Java properly defines priorities between 1 and 10, which we can rely on. However, virtual machines are implemented using system-specific mechanisms. That is, it will still use PThreads under the cover on a Unix system, using whatever scheme is available. There may be more priorities available (in which case there will be leftover priorities in-between), or there may not be enough. As a matter of facts, Windows NT merely has 7 priorities.

3.1Priority Inversion¶

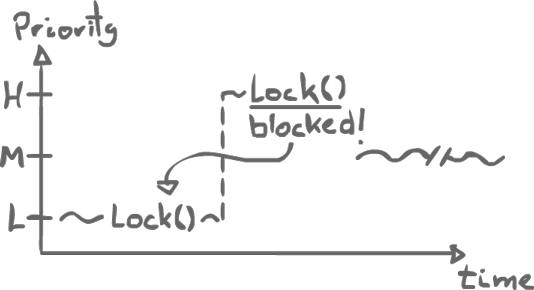

Priority inversion is a well-known and classic problem. The idea is that lower priority executing threads may be executed in favor of higher priority executing threads, despite the fact it should be the other way around. It is not a flaw of the priority system itself, it actually shows up due to locking.

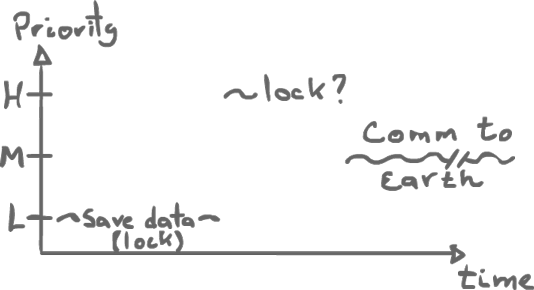

L starts executing and acquires a lock. Then, H starts, taking priority over L, and tries to acquire the lock, but L still has it, so it blocks. Then, M starts executing, taking priority over L again. For as long as M is executing, L cannot continue to execute and release its lock which in turn prevents H from executing.

3.1.1Mars Pathfinder example¶

There was a problem with the rover in that it kept resetting all the time for obscure reasons. The problem turned out to be due to priority inversion.

There was an info bus in the rover that had to be locked to be used properly.

H: Bus-manager

M: Communication with Earth

L: Gather data

The exact same problem as above was happening. When L was done gathering data and had to lock the bus to store it, H had to wait for it to be unlocked, but M would initiate a lengthy communication back to Earth, preventing L from releasing the lock, which in turn prevented H from executing.

3.1.2First solution: Priority Inheritance¶

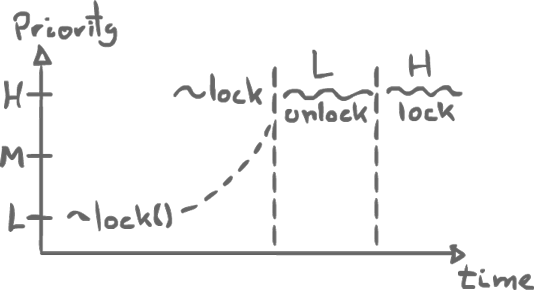

There are two ways to try and fix the problem of priority inversion.

When a low priority threads acquires a lock, it could temporarily be given the priority of the highest priority thread waiting on it. In effect, the highest priority ends up being associated with a lock.

That is what they used in Pathfinder.

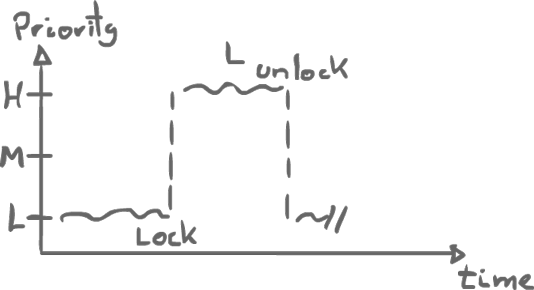

3.1.3Second solution: Priority Ceilings¶

A priority is statically associated with a lock. Then, when a thread acquires a lock, it gets raised to the priority associated with that lock.

4TSD (also known as TLS)¶

TSD and TLS are two different acronyms for the same idea.

Inside a concurrent program, local variables (stack or registers variables) are also local to threads; they are thread-specific. That is, each thread has its own copy. If a Thread has a local variable x, then each instance will read and write its own copy.

Global variables, are shared; a thread can see the reads and writes of another. Sometimes, however, it would be convenient if those global variables could be thread-specific as well, in the sense that, through the same syntax, threads could access global variables that are actually (somehow) thread-specific. Aka,

one global shared symbol name, but thread specific values.

For example, in C, there is a global variable called errno. This variable gives access to the last error code that occurred after a system call.

x = read(); if (x < 0) // Something went wrong, what was it? printf("%d\n", errno);

This works fine in a single threaded context. In a multi threaded context, however, the issue that a global variable holds a thread-specific value arises. For example, if Thread0 does the above and Thread1 does similarly (y = read(), but with a different file), it is possible for Thread0's read to fail while Thread1's succeeds. Depending on the interleaving, it is possible for errno to hold no error code at all for Thread0 to inspect because of Thread1's success.

4.1Java's TLS: Thread Local Storage¶

What we need is a global variable amongst threads that does not confuse thread-specific values. Java gives the Thread Local Storage (TLS). To use it, we must create a TLS instance which becomes the global variable. All the threads can access (read and write) the same object, but they all have distinct storage in the object.

class foo { public static x = new ThreadLocalStorage(); ... }

After that, Thread0 could set something (x.set(7)) while Thread1 sets something else (x.set(8)), but as expected, each threads will retrieve their own respective values using x.get().

We can also implement TLS by ourselves:

/** * This is not the way Java does it, but it works */ class ThreadLocalStorage { private HashTable<Integer, Object> ht = new HashTable<Integer, Object>(); public void (Object x) { ht.put(Thread.currentThread(), x); } public Object get() { ht.get(Thread.currentThread()); } }

4.2PThreads' TSD: Thread-Specific Data¶

PThreads has something similar to TLS: Thread-Specific Data (TSD). The idea is the same (key, value pair), but the key is not the thread id. Rather, data is stored by indices, but each thread has its own copy for the same key:

| Table | k1 | k2 | k3 | ... |

|---|---|---|---|---|

| T0 | 0 |

... | ... | ... |

| T1 | ... | ... | ... | ... |

| T2 | 1 |

... | ... | ... |

If Thread0 accesses k1, it gets 0, but if Thread2 accesses k1, it gets 1 instead.

pthread_key_create(&key, destructor);&keyis a pointer to the variable the key will be stored in.destructoris a destructor function that will be called once the thread is terminated in order to clean up all the non-null TSD data.

Nb: The destructor might create more TSD (it is a pointer to a function which could do anything, really). We still want to clean it up, so the destructor is iterated until no data is left. PTHREAD_DESTRUCTOR_ITERATIONX_MAX holds the value of the maximum number of iterations.

4.3What about errno?¶

This is a special case. It can be accessed like any global variable, despite the fact it is actually TSD.

x = read() -> if error, set global variable errno to the error if(x < 0) print errno

if multithreaded

T0: x = read if(x < 0) print errno T1: if(x < 0) print errno