1Blocking¶

1.1Spin-locks¶

So far, we have used spin-locks for locking. They have the disadvantage that waiting is still active. That is, spinning (checking the variable over and over) consumes CPU time and wastes energy.

1.2Blocking solutions¶

Blocking solutions, on the other hand, perform much better. Rather than spinning, they work by letting a thread sleep if it is unable to enter a critical section. The thread currently in the critical section needs to wake up the sleeping thread when it is finished.

This is a good solution, but it takes a little bit of effort for this to work properly. Doing blocking naively does not work well:

// Thread 0 if (critSection.isOccupied()) sleep(); // Thread 1 -> In critical section -> Finish for (Thread t: sleepingThreads) t.wakeUp();

This will not work as, when using this kind of solution, the "lost wake-up problem" is easy to encounter:

if (isOccupied()) { unlock sleep // if another thread does: if (anyoneSleeping()) {wake}, it then misses the wakeup lock }

Say Thread1 is in the critical section. Then, Thread0 does the check if (critSection.isOccupied()) and sure enough, it sees that Thread1 is in the critical section, but before it even goes to sleep();, Thread1 finishes its job in the critical section and goes to wakeUp(); all the other threads, excluding Thread0 which has not yet inserted itself in the list. Then, Thread0 finally goes to sleep, risking to sleep forever.

2Semaphores¶

To solve the problem of lost wake-ups, Dijkstra, back in the 60s, came up with semaphores, one of his major contributions.

Semaphores and monitors are quite important; they are the most commonly used concurrency controls.

A semaphore is a positive integer counter s, along with two atomic operations:

void P(Semaphore s) { // Also called "down" or "wait" while (s == 0) sleep(); s -= 1; } // Atomic void V(Semaphore s) { // Also called "up" or "signal" s += 1; for (Thread t: sleepingThreads) t.wakeUp(); } // Atomic

Nb: "P" and "V" come from "wait" and "signal" in Dijkstra's native Dutch.

These two operations are guaranteed by the operating system to be atomic; the scheduler knows it should never interrupt any of those operations.

One analogy that is often used to explain the concept is that of a bowl with tokens in it. In order for a thread to compete for a critical section, it has to take a token. If the bowl is empty, then it cannot take a token, and it cannot enter the section. However, someone else could come along and put their token back. It then wakes up any other thread sleeping to tell them there is a token, and the first to get to it in time takes it. For anyone else, the bowl will still be empty, so they will go back to sleep.

If more than one thread is sleeping, deciding which one(s) to wake up is actually arbitrary. Ultimately, since P and S are atomic, only one thread can acquire a token. Any other thread will stay stuck in their while (s == 0) sleep(); loop.

2.1Examples¶

Semaphores simplify many concurrent programs.

2.1.1Binary Semaphore: Mutex¶

- Binary semaphore

- A semaphores where

sis either0or1. This is commonly used in what is called a mutex. - Mutex

- A binary semaphore where

sstarts a1.

Mutexes are a convenient way to make sure that only one thread can access a critical section at once.

Ownership: whoever decreases to 0 owns it and does the increasing later on.

2.1.2Signalling Semaphore: Join¶

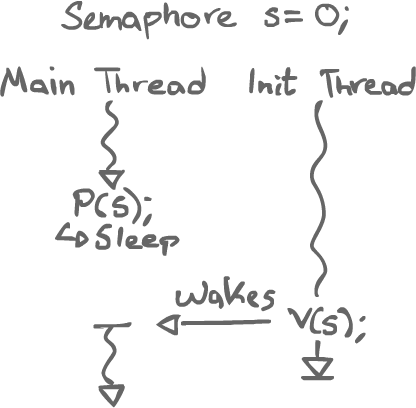

A binary semaphore can be used to force one thread to wait for another. If the binary semaphore starts at 0 instead of 1, it is called a signalling semaphore.

For example, a main thread could wait for an initialization thread to finish.

2.1.3Signalling Semaphores: Barrier (optional)¶

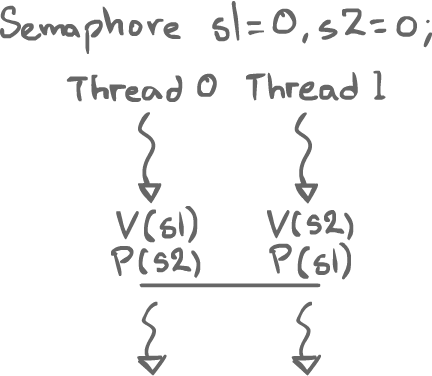

Another thing we can do is what is called a barrier, formed with two signalling semaphores. Let s1 = 0; s2 = 0;. When both of them signal the other, then we have a situation where both threads are waiting until the other one is there as well. That is, none will pass the barrier point until both is at their respective barrier point.

Two threads are waiting for each other. Neither progresses until the other gets in.

Nb: This kind of thing often appears on midterms/final. They are easy test questions, but tricky to get the logic right. It is therefore a good thing to understand them well.

2.1.4Counting Semaphore: Producer/Consumer¶

The general form of a semaphore where s is just a positive integer is also called a "counting semaphore". It is nice for representing resources.

Why would we want more than one thing to enter a critical section at a time? There is a lot of problems where this can be used. One of them is a classic problem called the Producer/Consumer problem also known as the bounded-buffer problem.

There are 2 threads. One is the producer, which produces data and stores it in an array of fixed size. The other thread, the consumer, consumes the data by removing it from the array. There are two specific problems:

1) The producer should not overflow the array.

2) The consumer should not consume from an empty array (underflow).

Data buffer[n]; Semaphore spaces = n; Semaphore filled = 0; int prodIndex = 0; int consIndex = 0; // Producer while (true) { Data d = produce(); P(spaces); // Wait if the array is full buffer[prodIndex] = d; prodIndex = (prodIndex + 1) % n; // Adding to the tail of a circular array V(filled); // Signal the consumer that there is data } // Consumer while (true) { Data d; P(filled); // Wait if there is no data d = buffer[consIndex]; consIndex = (consIndex + 1) % n; // Popping from the head of a circular array V(spaces); // Signal the producer that there is space left consume(d); }

If the producer is much faster than the consumer, then it will sleep once it fills the array. Likewise, if the consumer is much faster than the producer, then it will sleep once it consumes all the data.

3Monitors¶

Semaphores are not magic; they do have drawbacks. It is easy for the programmer to miss a P() or a V(). If such a function call is left out somewhere, then the whole program could fall apart, possibly in non-obvious ways. It is thus really hard to debug, making multithreaded programming of the hardest things to debug in computer science.

One of the reasons why it is so hard is that semaphores may encapsulate two distinct ideas: mutual exclusion (a form of resource consumption), and signalling (for thread communication). These concepts are easy to grasp, but making a mistake makes it hard to find.

To fix this, Per Brinch Hansen, another well known computer scientist, and Dijsktra both came up with the idea of monitors. They pretty much developed their idea of a monitor independently, with slightly different way to do it. We will be using Hansen's approach.

(Java has pieces to make monitors).

Monitors are one of the main concurrency controls. With both semaphores and monitors, we can basically solve all the concurrency problems we can come up with. It's just a question of how to use them to accomplish what we want to accomplish.

The idea is to package shared data together with the operations. We end up with an abstract data type called a monitor. All the procedures (methods) in a monitor are mutually exclusive. Furthermore, they are all protected by the same lock.

None of the shared (protected) data in a monitor should be visible outside of the monitor. Would the data be visible outside of the monitor, then no matter what the monitor did to enforce mutual exclusion, something outside could see the data and possibly corrupt it.

class Monitor { private Data sharedData; synchronized method1() { // Does whatever it does } synchronzied method2() { // Does whatever it does } }

The synchronized keyword basically says that as soon as the method is called, the caller must first acquire a lock respective to the object. As such, anything that uses a method on a monitor must try to acquire the monitor's lock. If it can, it proceeds, if it cannot, then it has to wait.

What about the signalling part? To do this, monitors use a special atomic construct called a condition variable (CV). CVs are always associated with monitors. A CV has 2 operations: sleep() (also called wait()) and signal() (also called notify()). They encapsulate the idea of signalling with other threads; all they do is communicate with other threads.

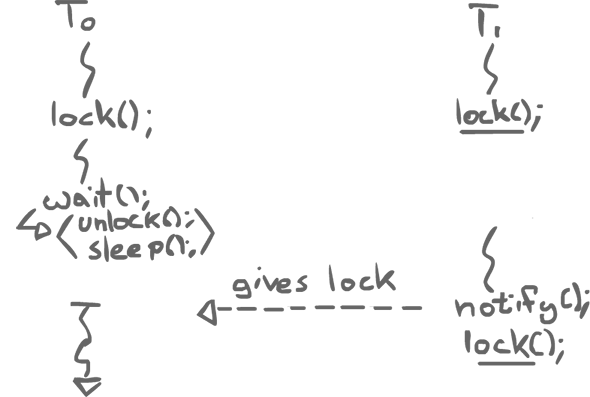

Once a thread is inside a monitor, it means it is holding a monitor's lock. A thread may wait() on a CV. This means that it releases the monitor's lock and goes to sleep, even though it is inside a synchronized method. This allows another thread to acquire the monitor's lock and enter a monitor's method.

The second thread then notify() the thread waiting on a CV. That thread then wakes up, but before it can continue, the notifying thread must leave the monitor, hereby releasing the lock for this thread to acquire.

// Thread 0 synchronized method1(x) { // Locks the monitor if (...) x.wait(); // Unlock the monitor, go sleep, lock, all atomically (or else someone would creep in before `sleep`, checks no one's sleeping, then bla // ... // Thread 1 synchronized method2(x) { // The monitor is locked, T1 cannot enter yet // But once T0 calls wait(), it can enter // Then, T1 acquires the lock // At one point, it calls... x.notify(); // which wakes thread waiting if any (hopefully T0). No control over who wakes up though // to wake up all threads waiting on x: x.notifyAll() // Finally, Thread 1 exits } // thus releasing the lock // Thread 0 // ... // Wakes up in the same method // Continues } // Finishes and releases the lock

Both of these areas, inside the `synchronized methods, are critical sections, but one thread can actually pause (wait on a condition variable), and let another thread inside the monitor. Only one thread is doing something at a time, but different threads can still share critical sections. This gives much finer grain control over the critical sections than when using semaphores because signalling and mutual exclusion are now two separate things.

How does it work? The monitor has a queue MQ of threads waiting to acquire the monitor lock. Each CV (there can be more than one) has a queue CVQ of sleeping threads. Say a thread T wants to use a monitor's method. If no one else is in the monitor, then T is let inside. Otherwise, T is added to MQ and is put to sleep.

void enter(T) { if no one in the monitor -> enter otherwise -> add to MQ } void wait(T, CVQ) { // Adds T to CBQ // Release the lock an put T to sleep } void notify(CVQ) { // Take a thread from the CVQ and put it into MQ // It does not wake it up, but merely moves it } void notifyAll(CVQ) { // Move all the threads in CVQ, and put them in MQ } void exit() { Take a thread T out of MQ and wake it up }

All of these four operations are atomic.

Nb: There is no guarantee of fairness. The queue may even be a set (not FIFO). How can we control which thread is woken up? We cannot; there is no easy to do it. As such, instead of doing if (B) wait();, we have to do while (B) wait();. This means that if a thread is unnecessarily woken up, it goes back to sleep.

So:

- not fcfs

- if there are multiple threads waiting...

if (B) wait()=>while (B) wait()

3.1Spurious (false) wake ups¶

This is important for another reason: spurious wakeups, a sort of weird and extremely uncommon thing, but it is possible in both Java and C++ that a thread may wake up without having been notified. We cannot assume that a thread is woken up because it was necessarily notified. All the more reason to double-check whatever condition inspired a thread to sleep in the first place.

3.2Review of Monitors¶

Semaphores have the disadvantage that they wrap up two separate ideas, signalling along the actual mutual exclusion. A solution to that is the idea of monitors, an abstract data type that gives mutual exclusion and a separate construct, the condition variable, which can be used for signalling.

A condition variable is really just a separate waiting queue. In Java, there is a single unnamed condition variable in every object, just like any object has a single unnamed lock. The Object class has the wait() and notify() methods available to use the condition variable. They can only be used within a synchronized block or method, or they will throw an Exception. PThreads has a separate condition variable that must be associated with a particular mutex, so the work must be done by oneself.

Normally, waiting releases the monitor lock, and puts the thread to sleep until another notifies it, after which the lock can be reacquired to continue on. However, an interesting property of any form of wait, is that it may incur spurious wakeups. That is, it is possible for a thread to wake up for no reason. As such, instead of waiting in a if () {} block, what should be done is to wait in a while () {}, thus allowing to retest the condition and go back to sleep upon a spurious wakeup.

3.3Trivial implementation of a condition variable¶

In fact, if we allow things like spurious wakeups, we can actually implement wait and notify in a very trivial way.

void trivial_wait() { // Next two statements are not even atomic unlock(); sleep(random()); lock() } void trivial_notify() { // Nothing! }

This makes a waiting thread wake up eventually, anyway. As such, the problem of lost wakeups is not present and it is not required for the unlock and sleep to be atomic.

4Semantics of condition variables¶

There are different ways we can implement condition variables.

4.1SIGNAL_AND_CONTINUE¶

When notify() is called in a monitor, it tells another thread it should wake up, but the notifying threads still holds the lock and continues. This is the default in Java, PThreads, etc.

4.2SIGNAL_AND_WAIT¶

The notifier gives the lock to the woken thread. Having given the lock, it does not have it anymore. It must reacquire the lock to continue.

4.3SIGNAL_AND_URGENT_WAIT¶

The notifier lends the lock to the woken thread, expecting it back. Once the woken thread is done, it returns the lock to the notifier. This requires a recursive/stack-based implementation.

4.4Notes¶

SIGNAL_AND_CONTINUE is easy to see, we just move things inside a queue. SIGNAL_AND_WAIT is a little bit trickier because implementing it means transferring ownership of the lock. SIGNAL_AND_URGENT_WAIT ends up being even trickier because it requires a stack to let a notified thread lend the lock to another thread.

Interestingly, implementing SIGNAL_AND_WAIT given SIGNAL_AND_CONTINUE is easy. Similarly, SIGNAL_AND_URGENT can be implemented given the other two. In fact, all the semantics are equivalent. Implementing the other two semantics with SIGNAL_AND_CONTINUE is left as an exercise.

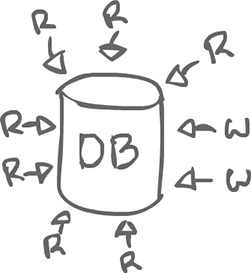

5Readers and Writers¶

There is some database with many threads/processes coming in, trying to access the database. Most accesses are reads, which do not interfere with each other; there is no need for synchronization. A problem shows up once an access is a write. Writers need mutual exclusion, but readers do not!

5.1First solution: Readers' preference¶

We will treat the Readers as a group rather than individual entities, contrary to the Writers. There will be mutual exclusion between individual Writers and the group of Readers.

// Using semaphores int readers = 0; BinarySemaphore r, rw; // Init to 1 // Readers while (true) { down(r); // Acquire the reader lock readers++; // Increment the count of readers if (readers == 1) down(rw); // If we are the first reader, grab the writer lock up(r); -> Read <- down(r); readers--; // Decrement the count of readers if (readers == 0) up(rw); // If we are the last reader, release the writer lock up(r); } // Writers while (true) { down(rw); // Grab the writer lock -> Write <- up(rw); // Release the writer lock }

The problem with that solution is that readers can maintain control as long as new readers keep showing up. A writer might show up, but as long as the group of readers have the control of the database, no writer can access it. It is a weak solution.

5.2Second solution: Writers' preference¶

// Using a monitor and condition variables class RW { private int nr; // Number of readers private int nw; // Number of writers private int ww; // Number of waiting writers void reader() { synchronized(this) { while (nw > 0 || ww > 0) wait(); // Wait if there is a writer, or any writer is waiting nr++; } read(); synchronized(this) { nr--; notifyAll(); // Wake waiting writers (well, all the threads really) } } void writer() { synchronized(this) { ww++; while (nr > 0 || nw > 0) wait(); // Wait if there are some readers, or a writer ww--; nw++; } write(); synchronized(this) { nw--; notifyAll(); // Wake waiting readers (well, all the threads really) } } }

Nb: If a writer shows up, readers must wait. If writers keep showing up, readers may starve, but considering reads are usually more common than writes, it is not necessarily much of a problem. Still, a fair solution would be really nice to have.

5.3Third solution: Fair solution¶

int nr; // Number of readers int nw; // Number of writers int ww; // Number of waiting writers int wr; // Number of waiting readers mutex e; conditionVariable okRead; // To signal the readers ConditionVariable okWrite; // To signal the writers // Reader lock(e); if (nw > 0 || ww > 0) { // This 'if' is wrong. It is left as an exercises to fix it wr++; wait(e, okRead); } else { nr++; } unlock(e); -> read <- lock(e); nr--; if (nr == 0) { if (ww > 0) { // Let in a single writer ww--; nw = 1; signal(okWrite); } } unlock(e); // Writer lock(e); if (nr > 0 || nw > 0 || wr > 0) { // Again, the 'if' is wrong ww++; wait(e, okWrite); } else { nw++; } unlock(e); -> write <- lock(e); nw--; if (wr > 0) { // Let in all the readers nr = wr; wr = 0; signal_all(okRead); } else if (ww > 0){ ww--; nw++; signal(okWrite); } unlock(e);